Introduction

How do people process a chatbot’s health information for different diseases? Will they trust the chatbot’s ability to give personalized suggestions? Will they push back against the chatbot’s empathic caring?

To address these questions, we conducted this research to explain the human-chatbot relationship in health contexts and to explore users’ acceptance of a chatbot’s empathy expression and personalized suggestions across different diseases.

Independent Variables

Empathy expression:

- In doctor-patient communication, scholars have posited two types of interaction analysis systems: the ‘cure’ system and the ‘care’ system. The ‘care’ system emphasizes affective or socioemotional behavior, where expressing empathy is crucial.

- However, expressing empathy in sensitive disease contexts may trigger an uncanny valley effect among people who believe that AI possesses intelligence.

Personalized suggestion:

- Doctor-patient communication serves several purposes, and through them the exchange of information benefits both doctors and patients. Through this exchange, doctors ought to elicit patients’ perceptions of the illness and their feelings about the disease, and then give personalized suggestions.

- However, personalized recommendations today are tied to privatized, data-driven systems that act as centers of personal data collection and processing, which increases perceived privacy costs and potential risks to consumers.

Disease sensitivity:

- Disease sensitivity refers to the potential harm that may occur when sensitive health information is shared publicly through media (Betsch, 2015).

- Beyond the ambivalence people already feel about behavior change, sexual health adds an extra layer of discomfort because it can be a particularly sensitive, intimate, and stigmatized topic.

Experiment

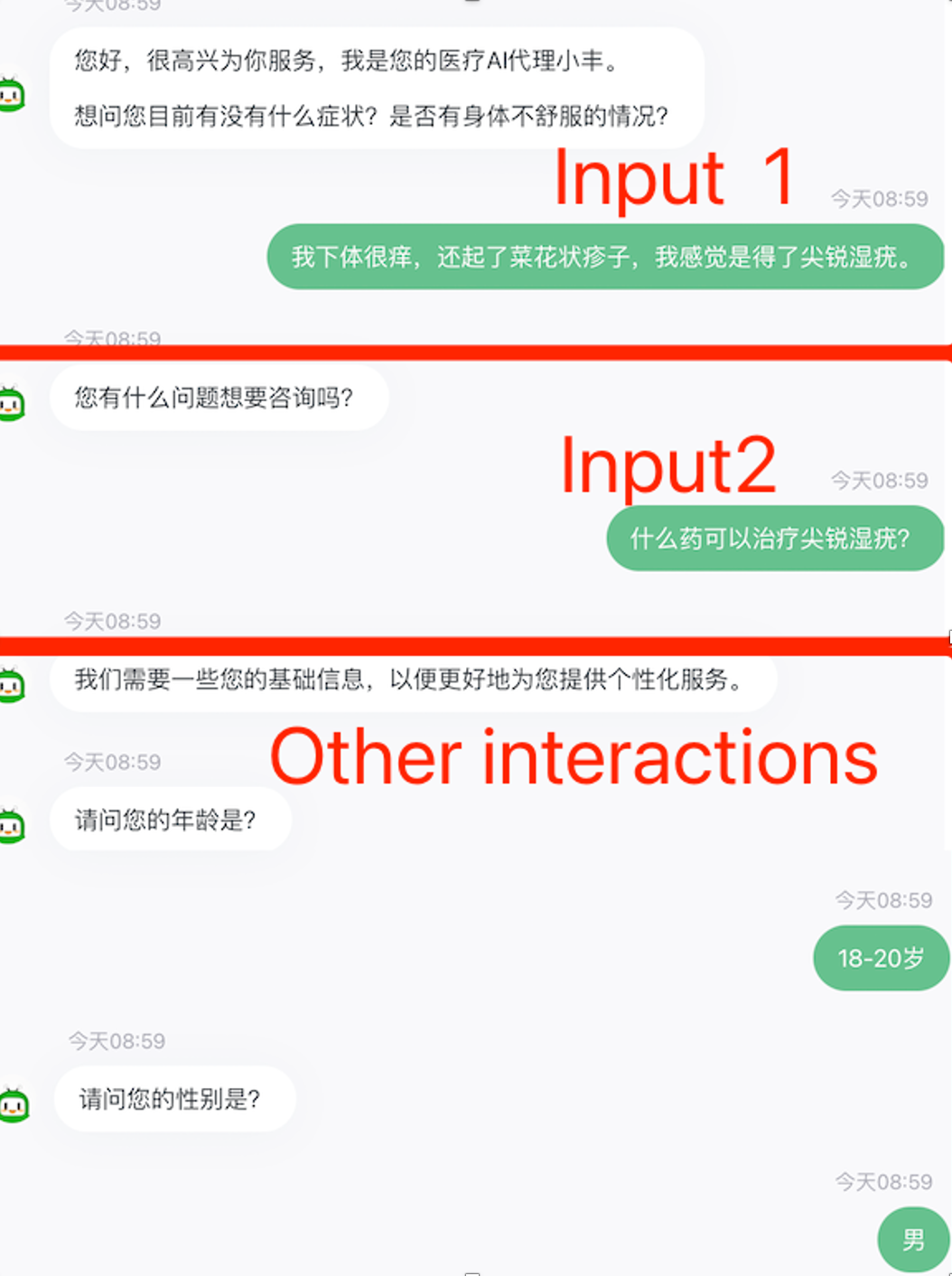

We designed a 2 (COVID-19 / sexually transmitted disease) × 2 (non-empathy / empathy) × 2 (non-personalization / personalization) factorial experiment. Participants were asked to converse with a health consultant chatbot built with Flow XO. During the conversation, they described their symptoms to the chatbot and asked for advice.

To ensure the stimuli were relevant, all participants were required to have COVID-19 or to have had sexual experience within the past six months. They were randomly assigned to eight groups.
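The eight groups correspond to every combination of the three two-level factors. The post does not say how participants were randomized, so the following is only a hypothetical Python sketch, assuming permuted-block randomization to keep group sizes balanced; the function name `assign_conditions` and the participant IDs are illustrative and not taken from the study.

```python
import itertools
import random

# The three two-level factors described in the post.
DISEASE = ("COVID-19", "sexually transmitted disease")
EMPATHY = ("non-empathy", "empathy")
PERSONALIZATION = ("non-personalization", "personalization")

# Every combination of the three factors: 2 x 2 x 2 = 8 conditions.
CONDITIONS = list(itertools.product(DISEASE, EMPATHY, PERSONALIZATION))


def assign_conditions(participant_ids, seed=None):
    """Randomly assign participants (in arrival order) to the eight conditions.

    Hypothetical scheme (an assumption, not the study's documented method):
    permuted-block randomization. Each block of eight slots contains every
    condition exactly once in shuffled order, so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    slots = []
    while len(slots) < len(participant_ids):
        block = CONDITIONS[:]  # copy so the shared list is never reordered
        rng.shuffle(block)
        slots.extend(block)
    # zip stops at the shorter sequence, dropping unused slots in the last block.
    return dict(zip(participant_ids, slots))


if __name__ == "__main__":
    ids = [f"P{i:02d}" for i in range(1, 17)]  # 16 hypothetical participant IDs
    for pid, condition in assign_conditions(ids, seed=42).items():
        print(pid, condition)
```

Blocking keeps the cells balanced even if recruitment stops early; simple coin-flip assignment for each factor would also be random but could leave some of the eight cells noticeably smaller than others.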

(Interaction steps)

What We Find

- According to the results, disease sensitivity and empathy do not significantly influence participants’ behavioral intention, whereas personalized suggestions do.

- When the chatbot offers personalized suggestions, participants feel a sense of emotional support, which in turn increases their intention to use the chatbot.

The limited effect of current chatbots’ empathy expression may stem from their text-based mode of communication. We propose that, beyond text-based empathy expression, robots could use non-verbal expressions of empathy to provide better empathic care for users.
